Using Agents

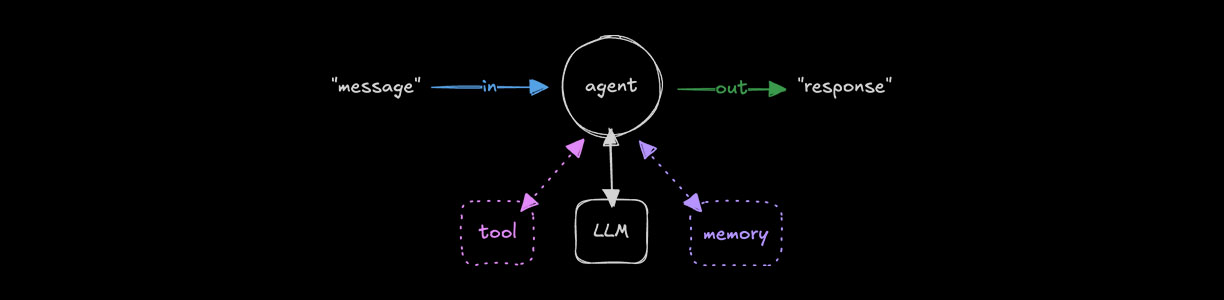

Agents use LLMs and tools to solve open-ended tasks. They reason about goals, decide which tools to use, retain conversation memory, and iterate internally until the model emits a final answer or an optional stop condition is met. Agents produce structured responses you can render in your UI or process programmatically. Use agents directly or compose them into workflows or agent networks.

An introduction to agents, and how they compare to workflows on YouTube (7 minutes)

Setting up agents

Installation

Add the Mastra core package to your project:

npm:

npm install @mastra/core@latest

pnpm:

pnpm add @mastra/core@latest

Yarn:

yarn add @mastra/core@latest

Bun:

bun add @mastra/core@latest

Mastra's model router auto-detects environment variables for your chosen provider. For OpenAI, set OPENAI_API_KEY:

.env

OPENAI_API_KEY=<your-api-key>

Note: Mastra supports more than 600 models. Choose from the full list.

Create an agent by instantiating the Agent class with system instructions and a model:

src/mastra/agents/test-agent.ts

import { Agent } from "@mastra/core/agent";

export const testAgent = new Agent({

id: "test-agent",

name: "Test Agent",

instructions: "You are a helpful assistant.",

model: "openai/gpt-5.1",

});

Instruction formats

Instructions define the agent's behavior, personality, and capabilities. They are system-level prompts that establish the agent's core identity and expertise.

Instructions can be provided in multiple formats for greater flexibility. The examples below illustrate the supported shapes:

// String (most common)

instructions: "You are a helpful assistant.";

// Array of strings

instructions: [

"You are a helpful assistant.",

"Always be polite.",

"Provide detailed answers.",

];

// Array of system messages

instructions: [

{ role: "system", content: "You are a helpful assistant." },

{ role: "system", content: "You have expertise in TypeScript." },

];
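The shapes above carry the same kind of information. As a rough illustration, the sketch below reduces each shape to a list of system messages. The `normalizeInstructions` helper and its types are hypothetical names invented for this example; they are not part of @mastra/core and do not describe how Mastra processes instructions internally.

```typescript
// Hypothetical types and helper for illustration only; not part of @mastra/core.
type SystemMessage = { role: "system"; content: string };
type Instructions = string | string[] | SystemMessage | SystemMessage[];

function normalizeInstructions(input: Instructions): SystemMessage[] {
  // Wrap a single value so every shape can be handled as an array.
  const items: Array<string | SystemMessage> = Array.isArray(input)
    ? input
    : [input];

  // Plain strings become system messages; message objects pass through unchanged.
  return items.map((item): SystemMessage =>
    typeof item === "string" ? { role: "system", content: item } : item,
  );
}
```

Under this sketch, a single string and a one-element array of strings produce the same result, which is one way to see why the string form is enough for most agents.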

Provider-specific options

Model providers expose additional options, such as prompt caching and reasoning configuration. Use the providerOptions field to manage these. Setting providerOptions at the instruction level lets you apply a different caching strategy to each system instruction or prompt.

// With provider-specific options (e.g., caching, reasoning)

instructions: {

role: "system",

content:

"You are an expert code reviewer. Analyze code for bugs, performance issues, and best practices.",

providerOptions: {

openai: { reasoningEffort: "high" }, // OpenAI's reasoning models

anthropic: { cacheControl: { type: "ephemeral" } } // Anthropic's prompt caching

}

}

Visit Agent reference for more information.

Dynamic instructions

Instructions can be provided as an async function, allowing you to resolve prompts at runtime. This enables patterns like personalizing instructions based on user context, fetching prompts from external registry services, and running A/B tests with different variants.

See Dynamic instructions for examples.
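To make the idea concrete, here is a minimal, self-contained sketch of an async resolver that picks a prompt variant and personalizes it. It is not Mastra's API: the `Context` type (a plain Map), the `variant` and `user-name` keys, and the `resolveInstructions` function are all assumptions made for this example.

```typescript
// Stand-in for a request-scoped context; a plain Map is an assumption made for this sketch.
type Context = Map<string, string>;

const defaultInstructions = "You are a helpful assistant.";

// Hypothetical prompt variants, for example for an A/B test.
const variants: Record<string, string> = {
  concise: `${defaultInstructions} Keep answers under three sentences.`,
};

// An async resolver: the lookup could equally await a database or a prompt registry.
async function resolveInstructions(context: Context): Promise<string> {
  // Fall back to the default prompt when no known variant is requested.
  const base = variants[context.get("variant") ?? ""] ?? defaultInstructions;

  // Personalize only when a user name is present in the context.
  const name = context.get("user-name");
  return name ? `${base} Address the user as ${name}.` : base;
}
```

Because the resolver runs per request, two users can receive different system prompts from the same agent definition.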

Registering an agent

Register your agent in the Mastra instance to make it available throughout your application. Once registered, it can be called from workflows, tools, or other agents, and has access to shared resources such as memory, logging, and observability features:

import { Mastra } from "@mastra/core";

import { testAgent } from "./agents/test-agent";

export const mastra = new Mastra({

agents: { testAgent },

});

Registering subagents

You can register subagents within an agent. They'll be executed as tools from the parent agent. Read the using agents as tools documentation to learn more.

Registering subworkflows

You can register subworkflows within an agent. They'll be executed as tools from the parent agent. Read the using workflows as tools documentation to learn more.

Referencing an agent

You can call agents from workflow steps, tools, the Mastra Client, or the command line. Get a reference by calling .getAgent() on your mastra or mastraClient instance, depending on your setup:

const testAgent = mastra.getAgent("testAgent");

mastra.getAgent() is preferred over a direct import, since it provides access to the Mastra instance configuration (logger, telemetry, storage, registered agents, and vector stores).

Generating responses

Agents can return results in two ways: generating the full output before returning it, or streaming tokens in real time. Choose the approach that fits your use case: generate for short, internal responses or debugging, and stream to show output to end users as quickly as possible.


Pass a single string for simple prompts, an array of strings when providing multiple pieces of context, or an array of message objects with role and content.

(The role defines the speaker for each message. Typical roles are user for human input, assistant for agent responses, and system for instructions.)

const response = await testAgent.generate([

{ role: "user", content: "Help me organize my day" },

{ role: "user", content: "My day starts at 9am and finishes at 5.30pm" },

{ role: "user", content: "I take lunch between 12:30 and 13:30" },

{

role: "user",

content: "I have meetings Monday to Friday between 10:30 and 11:30",

},

]);

console.log(response.text);

To stream the response instead, call .stream() with the same input formats:

const stream = await testAgent.stream([

{ role: "user", content: "Help me organize my day" },

{ role: "user", content: "My day starts at 9am and finishes at 5.30pm" },

{ role: "user", content: "I take lunch between 12:30 and 13:30" },

{

role: "user",

content: "I have meetings Monday to Friday between 10:30 and 11:30",

},

]);

for await (const chunk of stream.textStream) {

process.stdout.write(chunk);

}

Completion using onFinish()

When streaming responses, the onFinish() callback runs after the LLM finishes generating its response and all tool executions are complete.

It provides the final text, execution steps, finishReason, token usage statistics, and other metadata useful for monitoring or logging.

const stream = await testAgent.stream("Help me organize my day", {

onFinish: ({ steps, text, finishReason, usage }) => {

console.log({ steps, text, finishReason, usage });

},

});

for await (const chunk of stream.textStream) {

process.stdout.write(chunk);

}
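The ordering described above, where chunks arrive first and the completion callback fires once at the end, can be sketched without a model. In the example below, `fakeTextStream` and `collect` are hypothetical helpers written for illustration; they mimic the shape of consuming a text stream with `for await` but are not Mastra functions.

```typescript
// Hypothetical stand-in for a model stream: yields text chunks, then reports the full text.
async function* fakeTextStream(
  chunks: string[],
  onFinish: (result: { text: string }) => void,
): AsyncGenerator<string> {
  let text = "";
  for (const chunk of chunks) {
    text += chunk;
    yield chunk;
  }
  // Runs once, after the consumer has received the last chunk.
  onFinish({ text });
}

// Consumer: handles chunks as they arrive and returns them in order.
async function collect(stream: AsyncIterable<string>): Promise<string[]> {
  const seen: string[] = [];
  for await (const chunk of stream) {
    seen.push(chunk);
  }
  return seen;
}
```

In a real UI the body of the `for await` loop would append each chunk to the page, while the completion callback is a natural place for logging or usage accounting.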

Visit .generate() or .stream() for more information.

Structured output

Agents can return structured, type-safe data using Zod or JSON Schema. The parsed result is available on response.object.

Visit Structured Output for more information.

Analyzing images

Agents can analyze and describe images by processing both the visual content and any text within them. To enable image analysis, pass an object with type: 'image' and the image URL in the content array. You can combine image content with text prompts to guide the agent's analysis.

const response = await testAgent.generate([

{

role: "user",

content: [

{

type: "image",

image: "https://placebear.com/cache/395-205.jpg",

mimeType: "image/jpeg",

},

{

type: "text",

text: "Describe the image in detail, and extract all the text in the image.",

},

],

},

]);

console.log(response.text);

Using maxSteps

The maxSteps parameter controls the maximum number of sequential LLM calls an agent can make. Each step includes generating a response, executing any tool calls, and processing the result. Limiting steps helps prevent infinite loops, reduce latency, and control token usage for agents that use tools. The default is 1, but it can be increased:

const response = await testAgent.generate("Help me organize my day", {

maxSteps: 10,

});

console.log(response.text);
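The effect of a step limit can be illustrated with a simulated loop. This is a simplified model, not Mastra's implementation: `runAgentLoop`, the `StepResult` type, and the `"max-steps"` finish reason are hypothetical names, and `callModel` stands in for a single LLM call.

```typescript
// Hypothetical step result: either the model requests a tool or returns final text.
type StepResult = { toolCall: string } | { text: string };

type LoopOutcome = {
  text: string | undefined;
  stepsUsed: number;
  finishReason: "stop" | "max-steps"; // illustrative labels only
};

// Simulated agent loop; `callModel` stands in for one LLM call.
function runAgentLoop(
  callModel: (step: number) => StepResult,
  maxSteps: number,
): LoopOutcome {
  for (let step = 1; step <= maxSteps; step++) {
    const result = callModel(step);
    if ("text" in result) {
      // The model produced a final answer, so the loop ends early.
      return { text: result.text, stepsUsed: step, finishReason: "stop" };
    }
    // Otherwise a tool would run here and its result would feed the next step.
  }
  // The step budget ran out before the model produced a final answer.
  return { text: undefined, stepsUsed: maxSteps, finishReason: "max-steps" };
}
```

With a model that needs two tool calls before answering, a limit of 10 lets the loop finish on the third step, while a limit of 2 stops it before any final text exists. That trade-off is the reason to size the limit to the number of tool round trips a task plausibly needs.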

Using onStepFinish

You can monitor the progress of multi-step operations using the onStepFinish callback. This is useful for debugging or providing progress updates to users.

onStepFinish is only available when streaming or generating text without structured output.

const response = await testAgent.generate("Help me organize my day", {

onStepFinish: ({ text, toolCalls, toolResults, finishReason, usage }) => {

console.log({ text, toolCalls, toolResults, finishReason, usage });

},

});

Using tools

Agents can use tools to go beyond language generation, enabling structured interactions with external APIs and services. Tools allow agents to access data and perform clearly defined operations in a reliable, repeatable way.

export const testAgent = new Agent({

id: "test-agent",

name: "Test Agent",

tools: { testTool },

});

Visit Using Tools for more information.

Using RequestContext

Use RequestContext to access request-specific values. This lets you conditionally adjust behavior based on the context of the request.

export type UserTier = {

"user-tier": "enterprise" | "pro";

};

export const testAgent = new Agent({

id: "test-agent",

name: "Test Agent",

model: ({ requestContext }) => {

const userTier = requestContext.get("user-tier") as UserTier["user-tier"];

return userTier === "enterprise"

? "openai/gpt-5"

: "openai/gpt-4.1-nano";

},

});

See Request Context for more information.

For type-safe request context schema validation, see Schema Validation.

Testing with Studio

Use Studio to test agents with different messages, inspect tool calls and responses, and debug agent behavior.